TCP Optimization

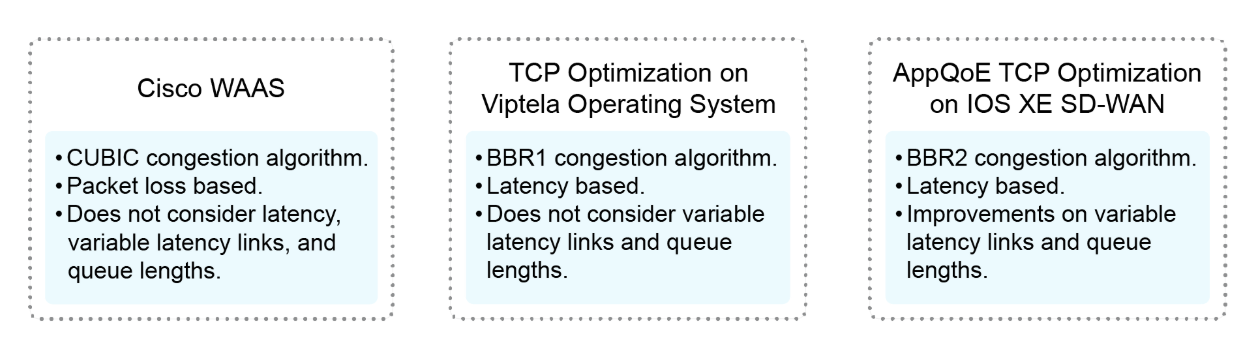

TCP optimization is supported in the Viptela operating system, Cisco WAAS, and AppQoE for IOS XE routers, but the three implementations differ.

In addition to guaranteed packet delivery, TCP incorporates congestion control, which adjusts the transmission rate based on link congestion. Cisco WAAS employs the CUBIC congestion control algorithm to calculate the sending rate. CUBIC suits traditional loss-based networks, where packet loss is the main signal for determining the transmission rate.
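To illustrate how a loss-based algorithm reacts to packet loss, here is a minimal sketch of the CUBIC window growth function as published in RFC 8312. The constants `c = 0.4` and `beta = 0.7` are that RFC's defaults; the function name and the sample window of 100 segments are assumptions made for illustration, and nothing here describes how Cisco WAAS implements the algorithm internally.

```python
def cubic_window(t, w_max, c=0.4, beta=0.7):
    """Congestion window (in segments) t seconds after a loss event.

    w_max is the window size just before the loss. The constants follow the
    RFC 8312 defaults; real stacks add TCP-friendly and fast-convergence
    logic that this sketch leaves out.
    """
    # K is the time needed to grow back to w_max after the window was cut
    # to beta * w_max in response to the loss.
    k = (w_max * (1 - beta) / c) ** (1 / 3)
    return c * (t - k) ** 3 + w_max
```

With `w_max = 100`, the window restarts at `beta * w_max` (70 segments) right after the loss, climbs back to 100 segments after roughly 4.2 seconds, and only then starts probing for more bandwidth. Every further loss restarts the cycle, which is why sustained loss keeps the sending rate low.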

Google introduced BBR (Bottleneck Bandwidth and Round-trip propagation time) as a newer congestion control mechanism that bases the sending rate on measured bandwidth and round-trip latency rather than on packet loss. BBR has two versions, BBR1 and BBR2. The Viptela operating system uses BBR1, which outperforms CUBIC, providing improved throughput and reduced latency. Nevertheless, BBR1 has limitations in handling different link latencies and queue lengths.
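The core idea behind BBR can be sketched as a small path model: the bottleneck bandwidth is taken as the highest recent delivery rate, the propagation delay as the lowest recent round-trip time, and the sending rate follows from those two estimates. The class below is a toy illustration under simplifying assumptions (sample-count windows instead of time-based ones, no state machine); the class and method names are hypothetical and do not come from any Cisco or Linux implementation.

```python
from collections import deque


class BbrModel:
    """Toy version of BBR's path model (not a full BBR state machine)."""

    def __init__(self, window=10):
        # Keep only the most recent samples. Real BBR uses time-based
        # windows; a fixed sample count is a simplification.
        self.rates = deque(maxlen=window)  # delivery-rate samples, bytes/s
        self.rtts = deque(maxlen=window)   # round-trip-time samples, seconds

    def on_ack(self, delivered_bytes, interval_s, rtt_s):
        """Record one delivery-rate sample and one RTT sample."""
        self.rates.append(delivered_bytes / interval_s)
        self.rtts.append(rtt_s)

    @property
    def btl_bw(self):
        """Estimated bottleneck bandwidth: highest recent delivery rate."""
        return max(self.rates)

    @property
    def rt_prop(self):
        """Estimated propagation delay: lowest recent round-trip time."""
        return min(self.rtts)

    def bdp_bytes(self):
        """Bandwidth-delay product: data needed in flight to fill the path."""
        return self.btl_bw * self.rt_prop

    def pacing_rate(self, gain=1.0):
        """Sending rate in bytes/s, scaled by a gain factor."""
        return gain * self.btl_bw
```

Packet loss does not appear anywhere as an input, which is the essential difference from a loss-based algorithm such as CUBIC.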

AppQoE adopts BBR2, an enhanced version of BBR1 that accounts for variable-latency links and queue lengths while reducing retransmissions. Recall that AppQoE also incorporates features such as forward error correction (FEC), packet duplication, and Data Redundancy Elimination (DRE) or Lempel-Ziv (LZ) compression, further enhancing the overall application experience for on-premises or cloud-based deployments.

TCP optimization improves the processing of TCP data traffic to reduce round-trip latency and increase throughput.

TCP optimization is especially beneficial on long-latency connections, such as transcontinental links and very-small-aperture terminal (VSAT) satellite communication systems. It can also enhance the performance of SaaS applications.
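The reason latency matters so much is that a single TCP flow can send at most one window of data per round trip, so its throughput is bounded by window size divided by round-trip time. The short calculation below shows that bound; the 64 KiB window and the 20 ms and 600 ms round-trip times are assumed values chosen for illustration, not measurements.

```python
def max_throughput_bps(window_bytes, rtt_s):
    """Upper bound on one TCP flow's throughput: one window per round trip."""
    return window_bytes * 8 / rtt_s


window = 64 * 1024  # assumed window size in bytes

terrestrial = max_throughput_bps(window, 0.020)  # assumed 20 ms round trip
satellite = max_throughput_bps(window, 0.600)    # assumed 600 ms round trip

print(f"20 ms path:  {terrestrial / 1e6:.1f} Mbit/s")
print(f"600 ms path: {satellite / 1e6:.2f} Mbit/s")
```

With the same window, the 20 ms path allows about 26 Mbit/s while the 600 ms path allows less than 1 Mbit/s, regardless of how much capacity the link itself has.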

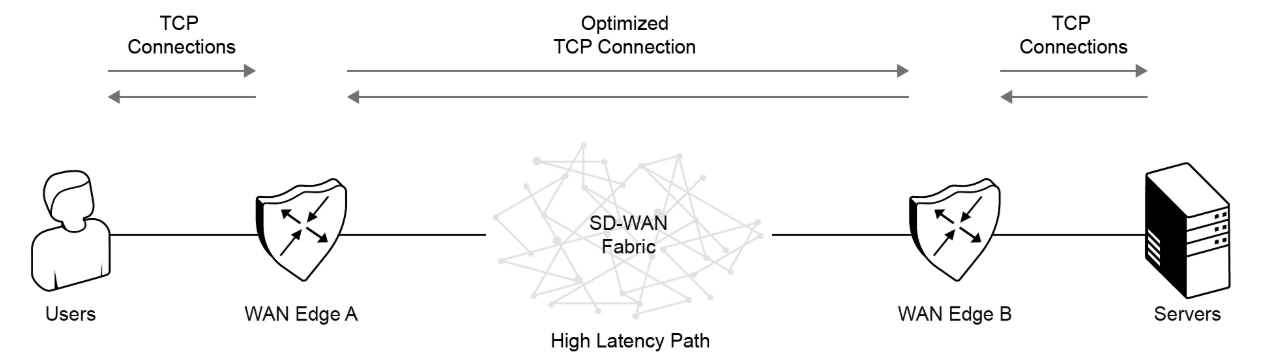

TCP optimization involves a router serving as a TCP proxy between a client starting a TCP flow and a server waiting for the flow.

In the figure, two routers act as proxies: WAN Edge A as the client proxy and WAN Edge B as the server proxy. With TCP optimization enabled, WAN Edge A terminates the client's TCP connection and establishes a new one with WAN Edge B, which in turn establishes a connection to the server. Both routers cache TCP traffic in their buffers so that data from the client reaches the server without the TCP connection timing out.
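The split-connection idea can be sketched in user space with Python's `asyncio` streams: the relay accepts a connection, opens a separate connection to the next hop, and copies bytes in both directions while buffering in between. This is only an illustration of the proxy concept; the function names are hypothetical, the relay binds to the loopback address for simplicity, and it does not reflect how a WAN Edge router implements the feature.

```python
import asyncio


async def pipe(reader, writer):
    """Copy bytes from one connection to the other until EOF."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()  # wait while the outgoing buffer is full
    except ConnectionError:
        pass  # the peer went away; fall through and clean up
    finally:
        writer.close()


def make_proxy_handler(next_hop_host, next_hop_port):
    """Build a handler that terminates a connection and opens a new one."""

    async def handle(client_reader, client_writer):
        # The inbound TCP connection ends here; a separate TCP connection
        # carries the data on to the next hop.
        up_reader, up_writer = await asyncio.open_connection(
            next_hop_host, next_hop_port
        )
        await asyncio.gather(
            pipe(client_reader, up_writer),
            pipe(up_reader, client_writer),
        )

    return handle


async def start_proxy(listen_port, next_hop_host, next_hop_port):
    """Start a relay on the loopback address (port 0 picks a free port)."""
    handler = make_proxy_handler(next_hop_host, next_hop_port)
    return await asyncio.start_server(handler, "127.0.0.1", listen_port)
```

Chaining two such relays, one pointing at the other and the second pointing at the server, mirrors the roles of WAN Edge A and WAN Edge B: the client and the server each talk to a nearby endpoint, and the long-latency segment in the middle is handled by its own connection.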

The general recommendation is to configure TCP optimization on both the client-proximal and the server-proximal router. This configuration, known as a dual-ended proxy, helps to ensure optimal performance. Although it is possible to configure TCP optimization only on the client-proximal router, referred to as a single-ended proxy, Cisco does not recommend this approach because it compromises the TCP optimization process. TCP is a bidirectional protocol and operates properly only when connection-initiation (SYN) messages are acknowledged by ACK messages in a timely fashion.

To use TCP optimization, first enable the feature on the router, then define which TCP traffic to optimize.

Topology and Roles

TCP optimization is implemented using two roles: a service node and a controller. The service node is a daemon that runs on the WAN Edge device and performs the actual optimization of TCP flows. The controller, known as the AppNav Controller, is responsible for selecting traffic and transporting it to and from the service node. The service node and the controller can run on the same router or on separate routers. AppNav is covered in more detail in a later topic.
