Inter-Pod Network

The IPN must support several specific functions to provide this inter-pod connectivity, including:

- Multicast support (PIM Bidir, with a multicast range of at least /15), which is needed to handle Layer 2 broadcast, unknown unicast, and multicast (BUM) traffic.

- Dynamic Host Configuration Protocol (DHCP) relay support.

- Open Shortest Path First (OSPF) support between the spine switches and the IPN routers.

- Increased maximum transmission unit (MTU) support to handle the Virtual Extensible LAN (VXLAN) encapsulated traffic.

- Quality of service (QoS) considerations for consistent QoS policy deployment across pods.

- Routed subinterface support, since subinterfaces on the IPN devices are mandatory for the connections toward the spine switches (traffic sent from the spine switch interfaces is always tagged with 802.1Q VLAN 4).

- LLDP must be enabled on the IPN devices.
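To make the list above more concrete, here is a minimal sketch that renders an NX-OS-style configuration stanza for one IPN port facing a spine. It is illustrative only. The `SpineFacingLink` type, the interface name, addresses, OSPF process tag, and area are hypothetical inputs. The fragment covers only the per-interface items: a VLAN 4 routed subinterface, increased MTU, OSPF, PIM, and DHCP relay. PIM Bidir behavior itself comes from a bidir RP definition for the GIPo range, which is not rendered here. Global feature enablement (OSPF, PIM, DHCP, LLDP) and QoS are also omitted. On most platforms the parent interface MTU typically has to be raised as well. Verify every command against your platform's documentation before use.

```python
from dataclasses import dataclass


@dataclass
class SpineFacingLink:
    """Hypothetical description of one IPN port that connects to an ACI spine."""
    parent_interface: str           # e.g. "Ethernet1/1" (assumed naming)
    ip_with_prefix: str             # point-to-point address on the subinterface
    ospf_process: str               # OSPF process tag (assumed value)
    ospf_area: str                  # OSPF area (assumed value)
    dhcp_relay_targets: list[str]   # APIC addresses to relay DHCP toward (assumed)
    mtu: int = 9150                 # increased MTU for VXLAN-encapsulated traffic


def render_subinterface(link: SpineFacingLink) -> str:
    """Render an NX-OS-style subinterface stanza for a spine-facing IPN link.

    Spines always tag traffic toward the IPN with 802.1Q VLAN 4, so the
    subinterface is numbered .4 and uses VLAN 4 encapsulation.
    """
    lines = [
        f"interface {link.parent_interface}.4",
        f"  mtu {link.mtu}",
        "  encapsulation dot1q 4",
        f"  ip address {link.ip_with_prefix}",
        "  ip ospf network point-to-point",
        f"  ip router ospf {link.ospf_process} area {link.ospf_area}",
        # PIM is enabled on the interface; Bidir mode comes from the RP config.
        "  ip pim sparse-mode",
    ]
    # One relay line per APIC so a new remote spine can reach any of them.
    lines += [f"  ip dhcp relay address {addr}" for addr in link.dhcp_relay_targets]
    lines.append("  no shutdown")
    return "\n".join(lines)


link = SpineFacingLink("Ethernet1/1", "192.168.1.1/30", "IPN", "0.0.0.0",
                       ["10.0.0.1", "10.0.0.2", "10.0.0.3"])
print(render_subinterface(link))
```

Generating the stanza from one record keeps the VLAN 4 tag, MTU, and relay targets consistent across every spine-facing link.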

Multicast Support

Besides unicast communication, east-west traffic in the Cisco Multi-Pod solution includes Layer 2 multi-destination flows belonging to bridge domains that are extended across pods. This type of traffic is referred to as BUM. It is encapsulated into a VXLAN multicast frame and transmitted to all the local leaf nodes inside the pod.

For this purpose, a unique multicast group, known as the Bridge Domain Group IP-outer (BD GIPo), is associated with each defined bridge domain. This multicast group is dynamically picked from the multicast range configured during the initial setup script of the APIC.
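The idea of "one unique group per bridge domain, drawn from the configured range" can be sketched with Python's `ipaddress` module. This is a hypothetical allocator that hands out addresses sequentially. It does not reproduce the APIC's real GIPo allocation scheme, and the range `225.0.0.0/15` is only an example value.

```python
import ipaddress


class GipoAllocator:
    """Hypothetical allocator: one unique multicast group per bridge domain.

    Illustrates the concept only; the APIC's actual allocation scheme differs.
    """

    def __init__(self, multicast_range: str) -> None:
        net = ipaddress.IPv4Network(multicast_range)
        if not net.is_multicast:
            raise ValueError(f"{multicast_range} is not a multicast range")
        self._free = iter(net)  # walks every address in the range, in order
        self._by_bd: dict[str, ipaddress.IPv4Address] = {}

    def gipo_for(self, bridge_domain: str) -> ipaddress.IPv4Address:
        # A BD keeps the same group once assigned; new BDs get the next free one.
        if bridge_domain not in self._by_bd:
            try:
                self._by_bd[bridge_domain] = next(self._free)
            except StopIteration:
                raise RuntimeError("multicast range exhausted") from None
        return self._by_bd[bridge_domain]


alloc = GipoAllocator("225.0.0.0/15")
print(alloc.gipo_for("BD-Web"))  # 225.0.0.0
print(alloc.gipo_for("BD-App"))  # 225.0.0.1
print(alloc.gipo_for("BD-Web"))  # 225.0.0.0 again
```

A /15 range contains 2^17 = 131,072 addresses, which is why the range is sized generously for a large number of bridge domains.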

To flood BUM traffic across pods, the same multicast group that is used inside the pod is also extended through the IPN. These multicast groups should work in Bidirectional Protocol Independent Multicast (PIM Bidir) mode and must be dedicated to this function (that is, not used for other purposes, applications, and so on). Layer 3 multicast (VRF GIPo) traffic is also forwarded over the IPN.

The main reasons for using PIM Bidir in the IPN network are:

- Scalability: Since BUM traffic can be originated by any of the leaf nodes deployed across pods, using a different PIM mode, such as PIM any-source multicast (ASM), would create multiple individual (S, G) entries on the IPN devices, which may exceed the capabilities of the specific platform. With PIM Bidir, only a single (*, G) entry is needed for a given bridge domain, independent of the overall number of leaf nodes.

- No requirement for data-driven multicast state creation: The (*, G) entries are created in the IPN devices as soon as a bridge domain is activated in the ACI Multi-Pod fabric, regardless of whether there is an actual need to forward BUM traffic across pods for that bridge domain. As a result, when that need arises, the network is already prepared, avoiding the longer convergence times that data-driven state creation (as in PIM ASM) would cause for applications.
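The scalability argument can be put into rough numbers. The sketch below uses a deliberately simplified model, which is an assumption: under PIM ASM, every leaf that sources BUM traffic for a bridge domain ends up with its own (S, G) entry in addition to the (*, G) entry. Under PIM Bidir, only one (*, G) entry per bridge domain group is needed. Real platforms may hold additional state, so treat the output as an order-of-magnitude comparison.

```python
def ipn_multicast_state(bridge_domains: int, source_leaves_per_bd: int) -> dict[str, int]:
    """Rough multicast route count on an IPN device (simplified model, assumed).

    PIM ASM: one (*, G) plus one (S, G) per sourcing leaf, for every BD group.
    PIM Bidir: a single (*, G) per BD group, regardless of the number of leaves.
    """
    return {
        "pim_bidir_entries": bridge_domains,
        "pim_asm_entries": bridge_domains * (1 + source_leaves_per_bd),
    }


# 1,000 stretched bridge domains, each with BUM sources on 40 leaf nodes.
print(ipn_multicast_state(1000, 40))
# {'pim_bidir_entries': 1000, 'pim_asm_entries': 41000}
```

The Bidir count stays flat as leaf nodes are added, whereas the ASM count grows with every new source leaf.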

DHCP Relay Support

The IPN must support DHCP relay, which enables you to auto-provision configuration for all the Cisco ACI devices deployed in remote pods. The devices can join the Cisco ACI Multi-Pod fabric with zero-touch configuration (similar to a single pod fabric).

Therefore, the IPN devices connected to the spine switches of a remote pod must be able to relay DHCP requests generated by a newly booting Cisco ACI spine toward the Cisco APIC nodes.

Cisco APICs use the TEP address range of the first pod, regardless of which pod they are physically connected to.
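Because the APICs always take their addresses from the first pod's TEP pool, the DHCP relay targets configured on the IPN can be sanity-checked against that pool. The following is a minimal sketch with a hypothetical helper name, APIC addresses, and pool value.

```python
import ipaddress


def relay_targets(apic_addresses: list[str], pod1_tep_pool: str) -> list[str]:
    """Validate DHCP relay targets for IPN interfaces facing remote-pod spines.

    APICs use the first pod's TEP pool even when attached to another pod,
    so every relay target is expected to fall inside that pool.
    """
    pool = ipaddress.ip_network(pod1_tep_pool)
    targets = []
    for addr in apic_addresses:
        ip = ipaddress.ip_address(addr)
        if ip not in pool:
            raise ValueError(f"APIC address {addr} is outside pod 1 TEP pool {pool}")
        targets.append(str(ip))
    return targets


print(relay_targets(["10.0.0.1", "10.0.0.2", "10.0.0.3"], "10.0.0.0/16"))
# ['10.0.0.1', '10.0.0.2', '10.0.0.3']
```

An APIC address from a second pod's pool (for example 10.1.0.1 with pod 2 using 10.1.0.0/16) would be rejected, which catches a common misconfiguration before the remote spine ever boots.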

OSPF Support

OSPFv2 is the only routing protocol (in addition to static routing) supported for connectivity between the IPN and the spine switches. It is used to advertise the TEP address range to the other pods. Therefore, the routers used for the IPN have to exchange routing information with the connected Cisco ACI spine switches using OSPF.

Increased MTU Support

The IPN must support an increased MTU on its physical connections so that VXLAN data-plane traffic can be exchanged between pods without fragmentation and reassembly, which would slow down communication. Before Cisco ACI Release 2.2, the spine nodes were hardcoded to generate 9150-byte full-size frames for exchanging MP-BGP control plane traffic with spine switches in remote pods. This mandated support for a 9150-byte MTU on all the Layer 3 interfaces of the IPN devices.

From Cisco ACI Release 2.2, a global configuration knob has been added on Cisco APIC to allow proper tuning of the MTU size of all the control plane packets generated by ACI nodes (leaf and spine switches), including inter-pod MP-BGP control plane traffic.

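Even with this knob available, it is still generally recommended to support a 9150-byte MTU throughout the IPN, so that full-size VXLAN-encapsulated data-plane traffic also crosses it without fragmentation.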
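The 9150-byte recommendation leaves headroom above the VXLAN overhead. The sketch below computes the smallest IP MTU an IPN link needs for a given endpoint MTU. It assumes an IPv4 outer header without options and an untagged inner Ethernet header. Those assumptions yield 50 bytes of overhead on top of the endpoint's IP packet.

```python
# Bytes VXLAN adds on top of the endpoint's IP packet, as seen by the IPN's
# Layer 3 interfaces (assumes IPv4 outer header without options and an
# untagged inner Ethernet header).
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

VXLAN_OVERHEAD = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET  # 50 bytes


def min_ipn_ip_mtu(endpoint_mtu: int) -> int:
    """Smallest IPN IP MTU that carries a full-size endpoint packet unfragmented."""
    return endpoint_mtu + VXLAN_OVERHEAD


def fits(endpoint_mtu: int, ipn_mtu: int = 9150) -> bool:
    """True if a full-size endpoint packet crosses an IPN link of ipn_mtu intact."""
    return min_ipn_ip_mtu(endpoint_mtu) <= ipn_mtu


print(min_ipn_ip_mtu(1500))  # 1550
print(min_ipn_ip_mtu(9000))  # 9050
print(fits(9000), fits(9100), fits(9101))  # True True False
```

With a 9150-byte IPN MTU, endpoints using jumbo frames of up to 9100 bytes still cross the IPN without fragmentation.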

QoS Considerations

Since the IPN is not managed by Cisco APIC and may modify the 802.1p (class of service [CoS]) priority settings, additional steps are needed to guarantee QoS priority in a Cisco ACI Multi-Pod topology.

When a packet leaves the spine switch in one pod toward the IPN, its outer header carries a CoS value that preserves the prioritization of the different traffic classes across pods. However, IPN devices may not preserve the 802.1p CoS setting during transport. Therefore, when the frame reaches the other pod, it may have lost the CoS information that was assigned at the source in the first pod.

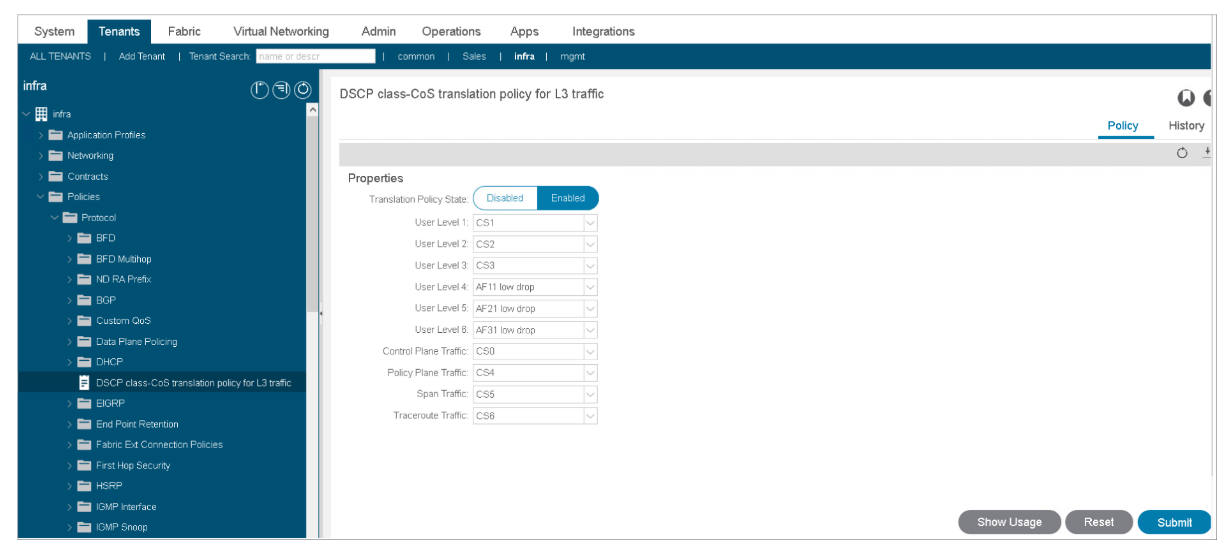

To preserve the 802.1p information in a Cisco ACI Multi-Pod topology, you have to configure a differentiated services code point (DSCP) policy on the APIC that maps CoS to DSCP levels for the different traffic types. You also have to ensure that the IPN devices do not overwrite the DSCP markings, so that the configured levels reach the remote pod unchanged. With a DSCP policy enabled, Cisco APIC converts the CoS level in the outer 802.1p header to a DSCP level in the outer IP header, and frames leave the pod according to the configured mappings. When they reach the second pod, the DSCP level is translated back to the original CoS level, so the QoS priority settings are preserved.
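The round trip described above can be modeled in a few lines. This sketch uses a hypothetical CoS-to-DSCP table. The real values are whatever is configured in the APIC translation policy. The sketch also models the worst case in the IPN by resetting the CoS to 0 on transit. The point it shows is that the remote spine recovers the class from the DSCP alone, so the class survives as long as the IPN leaves the DSCP untouched.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical CoS-to-DSCP translation table (the real values come from the
# APIC DSCP class-cos translation policy).
COS_TO_DSCP = {0: 0, 1: 8, 2: 16, 3: 24, 4: 32, 5: 40, 6: 48, 7: 56}
DSCP_TO_COS = {dscp: cos for cos, dscp in COS_TO_DSCP.items()}


@dataclass(frozen=True)
class OuterHeaders:
    cos: int   # 802.1p priority in the outer Ethernet header
    dscp: int  # DSCP in the outer IP header


def source_spine(cos: int) -> OuterHeaders:
    # With the DSCP policy enabled, the CoS is translated into the outer DSCP.
    return OuterHeaders(cos=cos, dscp=COS_TO_DSCP[cos])


def ipn_transit(hdr: OuterHeaders, rewrite_dscp: Optional[int] = None) -> OuterHeaders:
    # The IPN is not guaranteed to keep the CoS; model the worst case (reset to 0).
    hdr = replace(hdr, cos=0)
    if rewrite_dscp is not None:  # a misbehaving IPN that also rewrites DSCP
        hdr = replace(hdr, dscp=rewrite_dscp)
    return hdr


def remote_spine(hdr: OuterHeaders) -> int:
    # Restore the CoS from the DSCP; unknown values fall back to CoS 0 (assumption).
    return DSCP_TO_COS.get(hdr.dscp, 0)


print(remote_spine(ipn_transit(source_spine(5))))                  # 5: class preserved
print(remote_spine(ipn_transit(source_spine(5), rewrite_dscp=0)))  # 0: class lost
```

The second call shows why the IPN must not overwrite DSCP markings. Once the DSCP is changed, the remote pod has no way to recover the original class.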

A CoS-to-DSCP mapping for Layer 3 traffic is configured on the APIC through the Tenant > infra > Policies > Protocol > DSCP class-cos translation policy, which modifies the default behavior. As a result, when traffic is received on the spine nodes of a remote pod, it is reassigned to its proper class of service, based on the DSCP value in the outer IP header of the inter-pod VXLAN traffic, before being injected into the pod.

IPN Control Plane

Since the IPN represents an extension of the Cisco ACI infrastructure network, it must ensure that the VXLAN tunnels can be established across pods for endpoint communication.

During the auto-provisioning process for the nodes belonging to a pod, the APIC assigns one or more IP addresses to the loopback interfaces of the leaf and spine nodes that are part of the pod. All those IP addresses come from an IP pool that is specified during the bootup process of the first APIC node and is called the "TEP pool." The IPN must provide communication between the local TEPs and the remote TEPs.
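For the IPN to route between local and remote TEPs, each pod's TEP pool has to be distinct. The following minimal sketch uses hypothetical pod IDs and pool values. It rejects overlapping pools and maps a TEP address back to its pod, which is essentially the routing decision the IPN makes from the OSPF-advertised TEP ranges.

```python
import ipaddress
from itertools import combinations


def validate_tep_pools(pools: dict[int, str]) -> dict[int, ipaddress.IPv4Network]:
    """Parse per-pod TEP pools (pod ID -> CIDR, hypothetical) and reject overlaps."""
    nets = {pod: ipaddress.IPv4Network(cidr) for pod, cidr in pools.items()}
    for (pod_a, net_a), (pod_b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            raise ValueError(f"TEP pools of pod {pod_a} and pod {pod_b} overlap")
    return nets


def pod_of_tep(tep: str, nets: dict[int, ipaddress.IPv4Network]) -> int:
    """Return the pod whose TEP pool contains the given TEP address."""
    addr = ipaddress.IPv4Address(tep)
    for pod, net in nets.items():
        if addr in net:
            return pod
    raise LookupError(f"{tep} is not in any known TEP pool")


nets = validate_tep_pools({1: "10.0.0.0/16", 2: "10.1.0.0/16"})
print(pod_of_tep("10.1.32.64", nets))  # 2
```

Running this check before adding a pod helps catch an overlapping pool, which would otherwise make some remote TEPs unreachable across the IPN.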
